Vision

The motivation for Luminide came from our own experience over the past 10 years building technologies that span the entire AI hardware/software stack. We were two of the first employees at Nervana, an AI hardware accelerator startup that was later acquired by Intel. We developed AI algorithms, frameworks (before TensorFlow or PyTorch existed), compilers, and GPU kernels, all the way down to drivers. We published numerous papers and were granted multiple patents. And we built AI models. Lots and lots of AI models, used across a wide variety of domains including oil and gas, underwater exploration, and agriculture. Anil even holds Kaggle Grandmaster status, which places him among the top model designers in the world.

During this time we used a lot of great tools for model development. Some of our favorites include PyTorch, scikit-learn, Jupyter notebooks, cuDNN, and GPUs. But one tool was missing: a simple, unified IDE for AI model development. This page explains why such a tool is needed and how we are building it.

Data scientists should spend their time on data science

While building AI models, we were frustrated by how much time we spent on everything but data science. This included doing our own DevOps: downloading libraries, installing drivers, navigating complex dashboards, and troubleshooting issues. And we repeated the same tedious tasks over and over while developing each model: running numerous experiments, recording hyperparameters, tracking code changes, and sifting through log files.

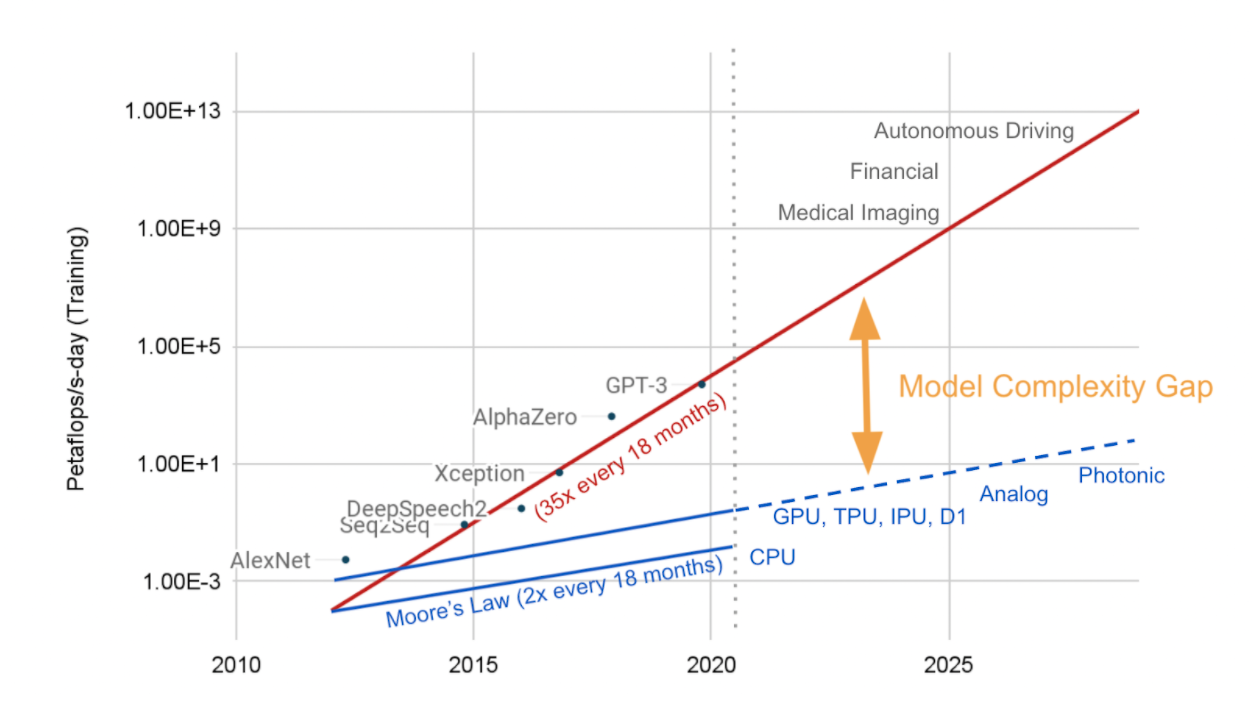

Model complexity gap

As models grew larger and more complex, we ran into the same time and cost issues as the rest of the industry, a problem we call the model complexity gap. While hardware performance follows Moore’s law and doubles every 18 months, model complexity increases 35x over the same period. Training a large model such as GPT-3, with 175 billion parameters, would take an estimated 355 years on a single GPU, and cost estimates range from $4.6M to $12M. And this gap will continue to grow over time.
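
The gap compounds. Using only the figures above (2x hardware growth versus 35x model-complexity growth per 18-month period), this minimal Python sketch shows how quickly the ratio between the two grows. It assumes both growth rates stay constant, and the function name `complexity_gap` is hypothetical.

```python
# Sketch of the "model complexity gap" arithmetic, using the figures in the text:
# hardware performance grows 2x and model complexity grows 35x per 18-month period.
# Assumption: both rates stay constant over time.
HARDWARE_GROWTH_PER_PERIOD = 2.0
MODEL_GROWTH_PER_PERIOD = 35.0
PERIOD_MONTHS = 18


def complexity_gap(periods: int) -> float:
    """Ratio of model-complexity growth to hardware growth after `periods` 18-month periods."""
    return (MODEL_GROWTH_PER_PERIOD / HARDWARE_GROWTH_PER_PERIOD) ** periods


if __name__ == "__main__":
    for periods in range(1, 4):
        years = periods * PERIOD_MONTHS / 12
        print(f"after {years:.1f} years: gap = {complexity_gap(periods):,.2f}x")
```

After one period the gap is 35 / 2 = 17.5x, and because it compounds, after two periods it is already 17.5² ≈ 306x.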

Simply scaling hardware won’t work: not only would it drive prices sky high, it would also produce an enormous carbon footprint. Studies show that training a state-of-the-art NLP model emits over 78,000 pounds of carbon emissions, equivalent to 125 round-trip flights between New York and Beijing.

Therefore, new software solutions will be an essential piece of this puzzle. The fundamental challenge is how to achieve the same model accuracy with significantly less computation. This saves time and money locally and reduces the carbon footprint globally.

A Simple, Unified IDE for Model Development

We heard the same frustrations from data scientists around the world: tools were too complicated and cumbersome, and models took too long to train. We eagerly awaited each new tool release, but after 10 years we still hadn’t found what we were looking for:

A focus on model development: Instead of tools dedicated to model development, we found end-to-end platforms, one-size-fits-all solutions, or tools for other stages of the data science lifecycle.

Uniformity: There were lots of great tools and libraries out there, including code editors, notebooks, open-source libraries, and standalone tools. But using them together required installing lots of software, resolving dependencies, navigating complicated dashboards to reserve cloud GPUs, or installing our own hardware and drivers.

Simplicity: Getting started with a monolithic enterprise platform is a daunting task. It takes significant time and energy to install software, learn a new ecosystem, read documentation, and so on.

This is why we built Luminide, a simple, unified IDE for model development. The Luminide IDE was designed from the ground up specifically for model development. Whether users start with an existing state-of-the-art model or design their own from scratch, Luminide streamlines and accelerates the development process: experiment to see whether new layers improve results, fine-tune with domain-specific data, determine which data augmentations give you the best bang for your buck, and tune hyperparameters to squeeze out that last bit of accuracy.

We reuse as much great open-source software as possible, e.g., Docker, Kubernetes, PyTorch, TensorFlow, JupyterLab, and JupyterHub. Luminide’s implementation consists largely of glue code that integrates these components.

Early Ranking

Another advantage of a unified IDE is the ability to design new, more complex optimizations. These optimizations help address the model complexity gap by reducing the amount of computation required during training.

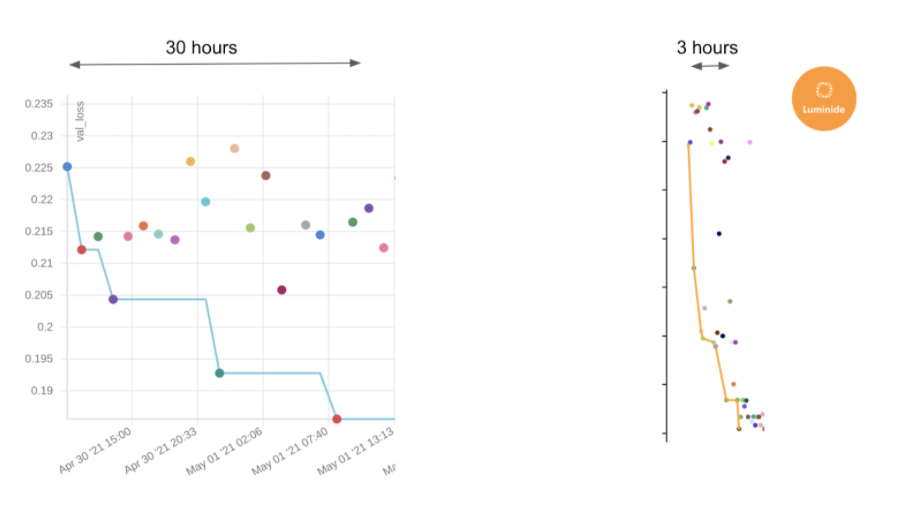

One optimization we’ve developed uses predictive modeling to determine the relative outcome of a training run after only a few epochs. For example, instead of running all 30 epochs of a training run, it may need only 3. Data scientists can use a run’s predicted accuracy relative to other runs for rapid prototyping and fast hyperparameter tuning. We call this optimization early ranking.
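
To make the idea concrete, here is a minimal Python sketch of early ranking. Luminide’s actual predictive model isn’t described here, so this sketch swaps in a deliberately simple stand-in: it fits each run’s validation accuracy to `a + b * ln(epoch)` over the first few epochs, extrapolates to a target epoch, and ranks runs by that prediction. The function names, the log-linear curve, and the sample accuracies are all hypothetical.

```python
import math
from typing import Dict, List, Tuple


def fit_log_linear(accuracies: List[float]) -> Tuple[float, float]:
    """Least-squares fit of acc ~ a + b * ln(epoch), with epochs numbered from 1."""
    if len(accuracies) < 2:
        raise ValueError("need at least two epochs to fit a curve")
    xs = [math.log(epoch) for epoch in range(1, len(accuracies) + 1)]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(accuracies) / len(accuracies)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, accuracies))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def predict_final_accuracy(accuracies: List[float], target_epoch: int) -> float:
    """Extrapolate the fitted curve to target_epoch, clamped to the valid range [0, 1]."""
    a, b = fit_log_linear(accuracies)
    return min(1.0, max(0.0, a + b * math.log(target_epoch)))


def early_rank(partial_runs: Dict[str, List[float]], target_epoch: int = 30) -> List[str]:
    """Return run names ordered best-first by predicted accuracy at target_epoch."""
    predicted = {
        name: predict_final_accuracy(accs, target_epoch)
        for name, accs in partial_runs.items()
    }
    return sorted(predicted, key=lambda name: predicted[name], reverse=True)


if __name__ == "__main__":
    # Validation accuracy after 3 of 30 planned epochs (hypothetical numbers).
    runs = {
        "lr=0.1": [0.52, 0.60, 0.63],
        "lr=0.01": [0.45, 0.55, 0.60],
        "lr=0.001": [0.30, 0.38, 0.43],
    }
    print(early_rank(runs))  # ['lr=0.01', 'lr=0.1', 'lr=0.001']
```

The toy data is chosen so that the run leading at epoch 3 (`lr=0.1`) is not the one predicted to finish first, which is the kind of decision early ranking is meant to support. The approach described here builds its predictive model ahead of time; this per-run curve fit does not attempt that.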

Note that the speedup from early ranking is multiplicative with other optimizations. For example, starting from a state-of-the-art Bayesian optimizer, we gain an additional 10x speedup. In a head-to-head hyperparameter tuning comparison, Luminide reached the same model accuracy in 3 hours that the current best-in-class software needed 30 hours to achieve.
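
A worked reading of the multiplicative claim follows. It rests on two simplifying assumptions that are not stated in the results above: each trial’s cost is proportional to the number of epochs it trains, and the optimizer evaluates the same number of trials with or without early ranking. Under those assumptions, the 30-epoch versus 3-epoch example gives a factor of 10 on top of whatever the optimizer already contributes. This is an illustration, not a measured breakdown of the 30-hour versus 3-hour comparison.

```latex
S_{\text{total}} = S_{\text{optimizer}} \times S_{\text{ER}},
\qquad
S_{\text{ER}} \approx \frac{\text{epochs per full run}}{\text{epochs per early-ranked run}} = \frac{30}{3} = 10,
\qquad
\frac{30\ \text{h}}{3\ \text{h}} = 10
```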

Optimizations like this are complicated. Early ranking requires building a predictive model ahead of time, applying it during training, generating additional run-time data, and smoothing that run-time data.
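
Smoothing matters because per-epoch validation metrics are noisy, and a predictor fed raw values can be misled by a single lucky or unlucky epoch. The text doesn’t say which smoother Luminide uses, so as an assumption this Python sketch applies a plain exponential moving average. The function `ema_smooth` and its `alpha` default are hypothetical.

```python
from typing import List


def ema_smooth(values: List[float], alpha: float = 0.5) -> List[float]:
    """Exponential moving average: s[0] = v[0], s[t] = alpha * v[t] + (1 - alpha) * s[t-1]."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[float] = []
    for value in values:
        if smoothed:
            # Blend the new observation with the running average.
            smoothed.append(alpha * value + (1.0 - alpha) * smoothed[-1])
        else:
            # Seed the average with the first observation.
            smoothed.append(value)
    return smoothed


if __name__ == "__main__":
    # Noisy per-epoch validation accuracy (hypothetical values).
    raw = [0.50, 0.62, 0.55, 0.66, 0.61]
    print([round(s, 3) for s in ema_smooth(raw, alpha=0.5)])
```

A smaller `alpha` smooths more aggressively but lags behind genuine changes in the curve, so the choice trades noise rejection against responsiveness.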

And all of this needs to happen without requiring any additional work from data scientists, such as modifying or instrumenting their code. That’s exactly the kind of burden we’re trying to free them from.
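
One way to collect training metrics without touching a user’s code is to read what the script already prints. This Python sketch is an assumption, not a description of how Luminide does it: it runs an unmodified command with `subprocess.run` and extracts validation accuracy from stdout using a hypothetical log format (`epoch N ... val_acc X`). The pattern `METRIC_LINE` and both function names are illustrative only.

```python
import re
import subprocess
import sys
from typing import Dict, Iterable, List

# Hypothetical log format, e.g. "epoch 3 loss 0.412 val_acc 0.815".
# Real training scripts log in many formats; this single regex is an assumption.
METRIC_LINE = re.compile(r"epoch\s+(\d+).*?val_acc\s+(\d*\.?\d+)")


def parse_val_accuracy(lines: Iterable[str]) -> Dict[int, float]:
    """Map epoch number -> validation accuracy for every line matching METRIC_LINE."""
    metrics: Dict[int, float] = {}
    for line in lines:
        match = METRIC_LINE.search(line)
        if match:
            metrics[int(match.group(1))] = float(match.group(2))
    return metrics


def run_and_capture(command: List[str]) -> Dict[int, float]:
    """Run an unmodified training command and parse metrics from its stdout."""
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return parse_val_accuracy(result.stdout.splitlines())


if __name__ == "__main__":
    # Stand-in for a user's training script: prints one metric line per epoch.
    fake_training = (
        "for e in range(1, 4): "
        "print(f'epoch {e} loss {1/e:.3f} val_acc {0.5 + 0.1*e:.2f}')"
    )
    print(run_and_capture([sys.executable, "-c", fake_training]))
```

Reading output after the process exits keeps the sketch simple; a tool that ranks runs mid-training would instead need to stream output line by line while the script runs.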

Looking Ahead

Early ranking is just the first optimization we’ve implemented in our IDE. Initial results with additional optimizations involving data augmentation and Neural Architecture Search already look promising. We’re excited to keep exploring these areas and, once we’ve finished a proof of concept, to add them to our product.

Automatic hyperparameter tuning and AutoML are two fields that have shown a lot of promise in recent years, yet today they are used by only a fraction of data scientists. For example, even the most popular AutoML tool is used by fewer than 25% of data scientists. Reasons include (1) they’re too complicated to use and (2) their large search spaces take too much time, cost too much money, or both. With Luminide’s ease of use and time- and cost-saving optimizations, we hope to make these promising technologies mainstream.

We believe the key to a great AI model development tool is a simple, unified IDE, which makes it as easy to use as possible and enables powerful, system-wide optimizations. We’ve already added fully automatic features to Luminide, such as experiment tracking, and one-click features, such as connecting to GPU servers. We’ve also implemented our first system-wide optimization, early ranking, which achieves a 10x speedup. And we’re looking forward to continuing to improve and enhance our system, allowing data scientists to finally focus on data science!

Sincerely,

Luke and Anil

Luminide co-founders